The evolution of data orchestration: Part 1 - the past and present

This is part 1 of a two-part article on the evolution of data orchestration. If you have already read this article, then check out “The evolution of data orchestration: Part 2 - the future”.

Data orchestration is one of the core building blocks of any data or ETL application and has existed for decades. In fact, data and workflow orchestration tools existed as early as the IBM mainframe days (e.g. CA-7, which was acquired in 1987).

Data orchestration tooling has evolved over the years, from Graphical User Interface tools like Control-M and SSIS, to code-first tools like Airflow, which make it easier for developers to apply software development best practices.

The most recent evolutions of data orchestration tools are in the areas of (1) a code-first approach to enable software development best practices like version control and testing, and (2) the movement towards declarative programming languages like SQL (with dbt) to enable Analytics Engineers (the people who interface between business and code to build data models that represent the real world) to create DAGs as part of their workflow.

Whilst the user interface, ease of use, and development practices of data pipelines have improved, the underlying design pattern of a data pipeline has not changed significantly. In this article, we’ll discuss the current design patterns for data orchestration, and then we’ll discuss a proposed design pattern for the future of data orchestration.

What is data orchestration?

Before we go any further, let’s take a minute to define data orchestration.

I define data orchestration as the service that is responsible for the execution of other data services (or software defined assets within a service) in a specified order: data integration, data transformation, reverse ETL, BI cached dataset refresh, ML model batch predictions or re-training.

Think of data orchestration as the General on a battlefield who is responsible for commanding their pieces (pawn, knight, bishop) to perform their assigned tasks.

A data orchestration service contains many data pipelines, where each pipeline is some kind of workflow definition. For example:

Ingest data to the warehouse → transform data in the warehouse → refresh BI cached dataset

Ingest data to the warehouse → transform data for ML feature engineering → trigger batch ML model predictions

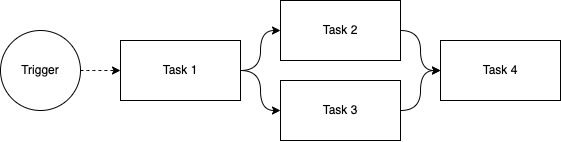

A data pipeline is typically built up of:

A trigger

One or more tasks (also referred to as nodes)

A Directed Acyclic Graph (DAG) to define dependencies between the nodes
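The three building blocks above can be sketched as a small data structure. This is only an illustration, not how any particular orchestrator models a pipeline: the `Pipeline` class, its field names, the cron-style trigger string, and the task names are all assumptions made for this example. It uses Python's standard `graphlib` module to check that the dependencies really are acyclic.

```python
from dataclasses import dataclass
from graphlib import CycleError, TopologicalSorter


@dataclass
class Pipeline:
    """Hypothetical pipeline definition: a trigger, tasks, and a DAG."""

    trigger: str  # assumed format: a cron expression or a sensor name
    # Maps each task (node) to the set of upstream tasks it depends on.
    dependencies: dict[str, set[str]]

    def is_acyclic(self) -> bool:
        """Return True if the dependencies form a valid DAG (no cycles)."""
        try:
            TopologicalSorter(self.dependencies).prepare()
            return True
        except CycleError:
            return False


# Ingest -> transform -> refresh BI cached dataset, triggered daily at 00:00.
bi_pipeline = Pipeline(
    trigger="0 0 * * *",
    dependencies={
        "ingest": set(),
        "transform": {"ingest"},
        "refresh_bi": {"transform"},
    },
)

# A definition with a circular dependency is not a DAG.
broken_pipeline = Pipeline(
    trigger="0 0 * * *",
    dependencies={"task_a": {"task_b"}, "task_b": {"task_a"}},
)

print(bi_pipeline.is_acyclic())      # True
print(broken_pipeline.is_acyclic())  # False
```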

The evolution of data orchestration patterns

Data orchestration patterns have evolved over the years, from rudimentary schedulers to DAGs. Let’s take a short walk down memory lane before discussing the future of data orchestration.

Gen 1 data orchestration (the past): flight schedule

What is it?

Tasks are triggered at specified intervals, without regard to whether their upstream tasks have completed. To allow for the case where an upstream task takes longer than usual to complete, a time buffer is added between tasks.

This is similar to how flights are scheduled today. A plane departs Sydney and lands in Perth. There is a 20-minute layover before the flight departs from Perth to Bali.

Limitations

This is a rudimentary design pattern and the biggest limitation of this pattern is Task delays.

In the scenario where Task 1 takes longer than usual to run, Task 2 would receive stale data inputs and would consequently output stale data to its downstream task. This causes a chain effect where all downstream Tasks after Task 2 (Task 3 → Task N) would process stale data, thereby rendering the entire pipeline execution moot.

This is the equivalent of the Sydney to Perth flight arriving late at the Perth airport, and then the Perth to Bali flight departing without any passengers.
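To make the chain effect concrete, here is a minimal simulation of a Gen 1 schedule. The task names, start times, and runtimes are made-up numbers for illustration, and the `find_stale_tasks` helper is hypothetical. It assumes each task reads only from the task scheduled immediately before it.

```python
# Hypothetical schedule: (task, scheduled start minute, actual runtime in minutes).
schedule = [
    ("task_1", 0, 50),   # normally finishes well within the buffer, but ran long today
    ("task_2", 30, 10),  # scheduled 30 minutes after task_1 as a time buffer
    ("task_3", 50, 10),
]


def find_stale_tasks(schedule):
    """Return the tasks that processed stale data.

    A task is stale if it started before the previous task finished,
    or if the previous task was itself stale (the chain effect).
    """
    stale = []
    prev_finish = 0
    prev_stale = False
    for name, start, runtime in schedule:
        is_stale = prev_stale or start < prev_finish
        if is_stale:
            stale.append(name)
        prev_finish = start + runtime
        prev_stale = is_stale
    return stale


stale_tasks = find_stale_tasks(schedule)
print(stale_tasks)  # ['task_2', 'task_3']
```

Note that `task_3` starts after `task_2` has finished, yet it is still stale because `task_2` ran on outdated inputs.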

Gen 2 data orchestration (the present): train schedule

What is it?

A Directed Acyclic Graph (DAG) is used to define dependencies between Tasks.

Task 4 will execute after Task 2 and Task 3 complete. A trigger is used to set off the data pipeline. The trigger is often a time-based trigger (e.g. 00:00 daily), or a sensor-based trigger (e.g. new files landing in S3).

This is similar to how train schedules run. On a train line, the train departs a station on a schedule. The train must travel between the stations on its train line in the pre-defined order (Station 1 → Station 2 → Station 3). The train cannot “jump” between stations because the train can only travel on tracks, with the tracks being analogous to DAGs.

This approach solves the problem of Task delays that we discussed in Gen 1.

In the example above, Task 4 will not execute until both Task 2 and Task 3 complete.
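The dependency-driven behaviour can be sketched with Python's standard `graphlib` module. This is not how Airflow, Dagster, or Prefect are implemented; it only illustrates the idea that a task becomes eligible to run once all of its upstream tasks are done. The assumption that Task 2 and Task 3 both depend on Task 1 is added here for the sake of a complete example.

```python
from graphlib import TopologicalSorter

# Maps each task to the set of upstream tasks it depends on.
# Assumption: task_2 and task_3 both depend on task_1.
dependencies = {
    "task_1": set(),
    "task_2": {"task_1"},
    "task_3": {"task_1"},
    "task_4": {"task_2", "task_3"},
}

sorter = TopologicalSorter(dependencies)
sorter.prepare()

# Each "wave" holds the tasks whose upstream dependencies have all completed.
waves = []
while sorter.is_active():
    ready = sorted(sorter.get_ready())
    waves.append(ready)
    for task in ready:
        # A real orchestrator would run the task here before marking it done.
        sorter.done(task)

print(waves)  # [['task_1'], ['task_2', 'task_3'], ['task_4']]
```

However long `task_2` or `task_3` takes, `task_4` is never released until both have been marked as done, which is what removes the need for time buffers.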

This is the de facto design pattern used by most data orchestration tools today, such as Airflow, Dagster, Prefect, and dbt (assuming you consider the DAGs for dbt models as a subset of data orchestration without the trigger).

Limitations

There are two limitations with this design pattern: (1) the mono-dag, and (2) the dag of dags.

The mono-dag

To avoid circular dependencies and to execute Tasks in the order we want, we add more nodes to the DAG until the DAG eventually gets big and unwieldy. I have worked in organisations where a single DAG consists of thousands of nodes.

When you start to get to hundreds or even thousands of nodes in a single DAG, the first problem is that it can take a very long time to compile and test the DAG, which slows down the development cycle. The second problem is ownership of the DAG. If the DAG fails, it is often up to the DAG owner to troubleshoot and either fix the failing Task themselves, or collaborate with the Task owner to fix the Task.

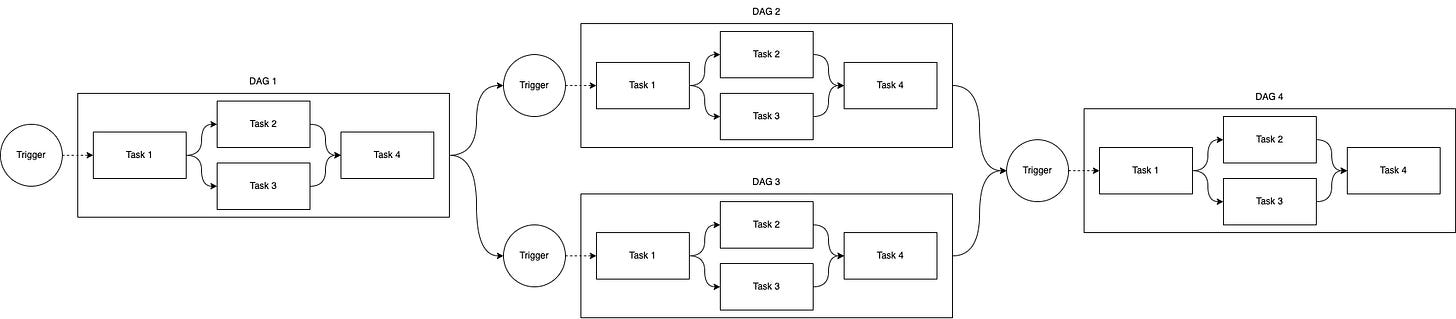

The dag of dags

To increase the pace of development cycles and provide clearer ownership, a DAG can be broken down into a DAG of DAGs. Each domain is responsible for creating and maintaining its own DAGs and configuring its own triggers (time-based or sensor-based).

The problem with the DAG of DAGs approach is creating dependencies between DAGs.

If we go with a time-based schedule to create dependencies between DAGs, we’re effectively going back to the Gen 1 pattern which isn’t ideal.

If we go with a sensor-based schedule, i.e. trigger DAG 4 after DAG 2 and DAG 3 complete, then we’re not executing the nodes in the most efficient manner, as we are not following the critical path.

For example, let’s assume that DAG_4.Task_1 is dependent on DAG_2.Task_2 and DAG_3.Task_3.

DAG_4.Task_1 could have triggered as soon as its upstream dependent tables, DAG_2.Task_2 and DAG_3.Task_3, are loaded.

However, since DAG 4 is dependent on DAG 2 and DAG 3, DAG 4 has to wait until both upstream DAGs are complete before DAG_4.Task_1 can execute.
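The cost of waiting on whole DAGs can be shown with a small calculation. The finish times below are invented for illustration, as are the helper function names and the extra tasks in DAG 2 and DAG 3. The sketch compares when DAG_4.Task_1 could start if it waited only on its true task-level dependencies versus waiting for both upstream DAGs to finish.

```python
# Hypothetical finish times, in minutes after the upstream DAGs start.
finish_times = {
    "DAG_2": {"Task_1": 5, "Task_2": 10, "Task_3": 40},
    "DAG_3": {"Task_1": 5, "Task_2": 10, "Task_3": 15, "Task_4": 60},
}

# The tasks that DAG_4.Task_1 actually depends on, as described above.
task_dependencies = [("DAG_2", "Task_2"), ("DAG_3", "Task_3")]


def earliest_start_task_level(finish_times, task_dependencies):
    """Start as soon as the specific upstream tasks have finished."""
    return max(finish_times[dag][task] for dag, task in task_dependencies)


def earliest_start_dag_level(finish_times, upstream_dags):
    """Start only once every task in every upstream DAG has finished."""
    return max(max(finish_times[dag].values()) for dag in upstream_dags)


task_level = earliest_start_task_level(finish_times, task_dependencies)
dag_level = earliest_start_dag_level(finish_times, ["DAG_2", "DAG_3"])
wasted = dag_level - task_level

print(task_level)  # 15
print(dag_level)   # 60
print(wasted)      # 45
```

With these numbers, DAG_4.Task_1 sits idle for 45 minutes waiting on tasks it does not depend on.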

DAGs of DAGs tend to be less efficient than mono-dags, and therefore most businesses try to stick with mono-dags for as long as possible before exploring other options.

Conclusion

Data orchestration patterns have evolved over the years. However, with more and more analytical datasets being created every day as a result of the rise of the analytics engineer, we are starting to hit bottlenecks sooner with our tightly coupled data orchestration design pattern.

In the next part, we discuss “The evolution of data orchestration: Part 2 - the future”.
